The brain - that's my second most favourite organ! - Woody Allen

Against this, consider the time taken for each elementary operation: neurons typically operate at a maximum rate of about 100 Hz, while a conventional CPU carries out several hundred million machine-level operations per second. Despite being built with very slow hardware, the brain has quite remarkable capabilities:

| | processing elements | element size | energy use | processing speed | style of computation | fault tolerant | learns | intelligent, conscious |
|---|---|---|---|---|---|---|---|---|
| brain | 10¹⁴ synapses | 10⁻⁶ m | 30 W | 100 Hz | parallel, distributed | yes | yes | usually |
| computer | 10⁸ transistors | 10⁻⁶ m | 30 W (CPU) | 10⁹ Hz | serial, centralized | no | a little | not (yet) |
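To put the table's numbers side by side, here is a back-of-envelope calculation as a small Python sketch. The constants are copied from the table; the "ceiling" assumes that every synapse fires at its maximum rate at the same time, which real brains do not do, so treat it as an illustration of how much massive parallelism can compensate for slow elements, not as a measured throughput.

```python
# Back-of-envelope comparison using the figures from the table above.
NEURON_RATE_HZ = 1e2   # maximum firing rate of a neuron (about 100 Hz)
CPU_RATE_HZ = 1e9      # processing speed of a conventional CPU
SYNAPSES = 1e14        # number of synapses in the brain


def speed_ratio(fast_hz: float, slow_hz: float) -> float:
    """How many times faster the fast element operates than the slow one."""
    return fast_hz / slow_hz


def parallel_upper_bound(elements: float, rate_hz: float) -> float:
    """Events per second if every element fired at its maximum rate at once.

    This is an idealised ceiling, not a measured throughput.
    """
    return elements * rate_hz


if __name__ == "__main__":
    print(f"per-element speed gap: {speed_ratio(CPU_RATE_HZ, NEURON_RATE_HZ):.0e}")
    print(f"brain ceiling: {parallel_upper_bound(SYNAPSES, NEURON_RATE_HZ):.0e} synaptic events/s")
    print(f"serial CPU:    {CPU_RATE_HZ:.0e} operations/s")
```

Each neuron is about 10⁷ times slower than the CPU, yet the ceiling for the brain as a whole (10¹⁶ synaptic events per second) lies far above the CPU's 10⁹ operations per second. The two quantities are not like-for-like (a synaptic event is not a machine instruction); the point is only the scale of the gap that parallelism can bridge.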

As a discipline of Artificial Intelligence, Neural Networks attempt to bring computers a little closer to the brain's capabilities by imitating certain aspects of information processing in the brain, in a highly simplified way.

The brain is not homogeneous. At the largest anatomical scale, we distinguish cortex, midbrain, brainstem, and cerebellum. Each of these can be hierarchically subdivided into many regions, and areas within each region, either according to the anatomical structure of the neural networks within it, or according to the function performed by them.

The overall pattern of projections (bundles of neural connections) between areas is extremely complex, and only partially known. The best-mapped (and largest) system in the human brain is the visual system, where the first 10 or 11 processing stages have been identified. We distinguish feedforward projections, which go from earlier processing stages (near the sensory input) to later ones (near the motor output), from feedback projections, which go in the opposite direction.
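The feedforward/feedback distinction depends only on the order of the two stages a projection connects. The following Python sketch assumes, for illustration, that stages are numbered 1, 2, 3, ... from the sensory input towards the motor output; the label `"lateral"` for a projection within a single stage is a hypothetical addition, since the text above names only the two directions.

```python
from typing import Literal

Direction = Literal["feedforward", "feedback", "lateral"]


def classify_projection(source_stage: int, target_stage: int) -> Direction:
    """Classify a projection between two numbered processing stages.

    Assumption: low stage numbers lie near the sensory input and high
    numbers near the motor output.
    """
    if target_stage > source_stage:
        return "feedforward"   # earlier stage -> later stage
    if target_stage < source_stage:
        return "feedback"      # later stage -> earlier stage
    return "lateral"           # hypothetical label: same stage, neither direction


if __name__ == "__main__":
    for src, dst in [(1, 2), (2, 1), (3, 3)]:
        print(src, "->", dst, classify_projection(src, dst))
```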

In addition to these long-range connections, neurons also link up with many thousands of their neighbours. In this way they form very dense, complex local networks:

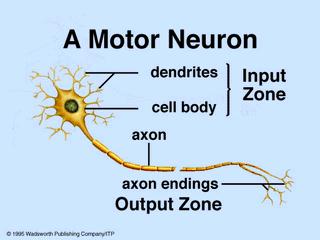

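The basic computational unit in the nervous system is the nerve cell, or neuron. A neuron has dendrites, which receive input; a cell body; and an axon, which carries the output signal to the axon endings.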

A neuron receives input from other neurons (typically many thousands). Inputs sum (approximately). Once the summed input exceeds a critical level, the neuron discharges a spike: an electrical pulse that travels from the cell body, down the axon, to the next neuron(s) (or other receptors). This spiking event is also called depolarization, and is followed by a refractory period, during which the neuron is unable to fire.
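The summing, threshold, and refractory behaviour described above can be sketched as a discrete-time "integrate-and-fire" neuron. This Python sketch is a deliberate simplification, not a biophysical model: it assumes discrete time steps, a potential that resets to 0 after each spike, and no leak (real membranes slowly lose accumulated charge). The parameter names `threshold` and `refractory_steps` and their defaults are illustrative assumptions.

```python
from typing import List, Sequence


def integrate_and_fire(inputs: Sequence[float], threshold: float = 1.0,
                       refractory_steps: int = 2) -> List[int]:
    """Simulate a simplified spiking neuron and return its spike train (0/1).

    Each step the input is added to an accumulated potential. When the
    potential reaches `threshold` the neuron spikes, resets to 0 and then
    ignores its input for `refractory_steps` steps.
    """
    potential = 0.0
    refractory = 0
    spikes: List[int] = []
    for x in inputs:
        if refractory > 0:
            # Refractory period: the neuron cannot fire; input is discarded.
            refractory -= 1
            spikes.append(0)
            continue
        potential += x               # inputs sum (here exactly linearly)
        if potential >= threshold:   # critical level reached -> spike
            spikes.append(1)
            potential = 0.0          # reset after the spike
            refractory = refractory_steps
        else:
            spikes.append(0)
    return spikes
```

For example, `integrate_and_fire([0.6] * 6)` returns `[0, 1, 0, 0, 0, 1]`: two inputs are needed to reach the threshold, and the two steps after each spike are refractory.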

The axon endings (Output Zone) almost touch the dendrites or cell body of the next neuron. Transmission of an electrical signal from one neuron to the next is effected by neurotransmitters, chemicals which are released from the first neuron and which bind to receptors in the second. This link is called a synapse. The extent to which the signal from one neuron is passed on to the next depends on many factors, e.g. the amount of neurotransmitter available, the number and arrangement of receptors, the amount of neurotransmitter reabsorbed, etc.
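Neurally inspired models commonly collapse all of these factors into a single number per synapse, its weight (or efficacy). A minimal Python sketch of such a threshold unit, assuming binary input spikes and a fixed threshold (both simplifications not taken from the text above):

```python
from typing import Sequence


def threshold_unit(spikes: Sequence[float], weights: Sequence[float],
                   threshold: float) -> int:
    """Abstract neuron: each synapse scales its incoming signal by a weight.

    The weight stands in for everything that determines how much of the
    presynaptic signal is passed on (neurotransmitter, receptors, ...).
    """
    if len(spikes) != len(weights):
        raise ValueError("need exactly one weight per input synapse")
    # Weighted sum of the incoming signals, one term per synapse.
    net_input = sum(s * w for s, w in zip(spikes, weights))
    # Fire (1) if the weighted input reaches the threshold, else stay silent (0).
    return 1 if net_input >= threshold else 0
```

With weights `[0.5, 0.7, 0.9]` and a threshold of `1.0`, input spikes `[1, 1, 0]` give a net input of 1.2 and the unit fires (returns `1`), while `[1, 0, 0]` gives 0.5 and it stays silent (returns `0`).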

Brains learn. Of course. From what we know of neuronal structures, one way brains learn is by altering the strengths of connections between neurons, and by adding or deleting connections between neurons. Furthermore, they learn "on-line", based on experience, and typically without the benefit of a benevolent teacher.

The efficacy of a synapse can change as a result of experience, providing a basis for both memory and learning through long-term potentiation (LTP). One way this happens is through release of more neurotransmitter. Many other changes may also be involved.

Hebb's Postulate:

"When an axon of cell A... excite[s] cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells so that A's efficiency as one of the cells firing B is increased."
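The postulate is often turned into a simple learning rule. The Python sketch below is one common reading, not Hebb's own formulation: the weight of the synapse from cell A to cell B grows by the learning rate η times A's activity x times B's activity y (Δw = η · x · y). It uses only locally available quantities and needs no teacher, matching the "on-line" learning described above. The function name, parameter names, and the default learning rate of 0.1 are illustrative assumptions.

```python
from typing import List, Sequence


def hebbian_update(weights: Sequence[float], pre: Sequence[float],
                   post: float, learning_rate: float = 0.1) -> List[float]:
    """One step of the plain Hebbian rule: w_i += learning_rate * pre_i * post.

    pre[i] is the activity of presynaptic cell i (cell A in the quote),
    post is the activity of the postsynaptic cell (cell B).
    """
    if len(weights) != len(pre):
        raise ValueError("need exactly one presynaptic activity per weight")
    # A synapse is strengthened only when its input and the output are both active.
    return [w + learning_rate * x * post for w, x in zip(weights, pre)]


if __name__ == "__main__":
    w = [0.0, 0.0]
    for _ in range(3):
        # Input 0 repeatedly takes part in firing the cell; input 1 is silent.
        w = hebbian_update(w, pre=[1.0, 0.0], post=1.0)
    print(w)
```

After three co-activations the weights are about `[0.3, 0.0]`: only the synapse that repeatedly took part in firing the cell was strengthened. Note that this plain rule lets weights grow without bound; practical models usually add some form of normalization or decay.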

Bliss and Lømo discovered LTP in the hippocampus in 1973.

Points to note about LTP:

The following properties of nervous systems will be of particular interest in our neurally inspired models:

Further surfing: The Nervous System - a great introduction, many pictures